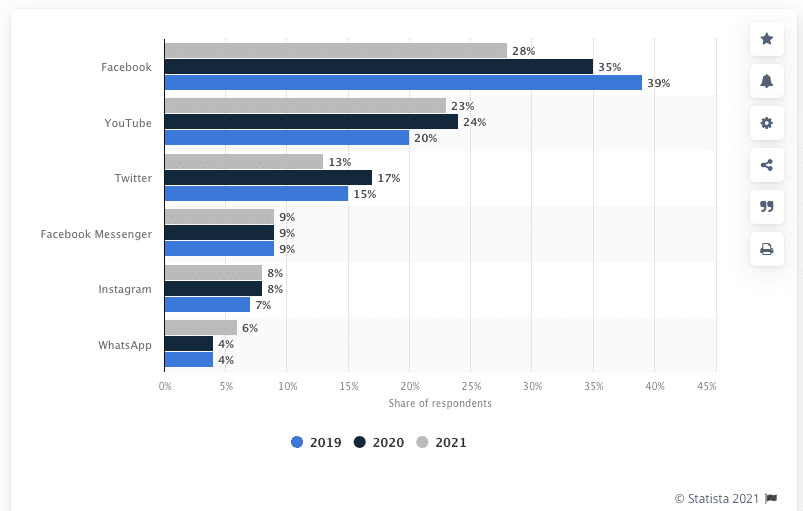

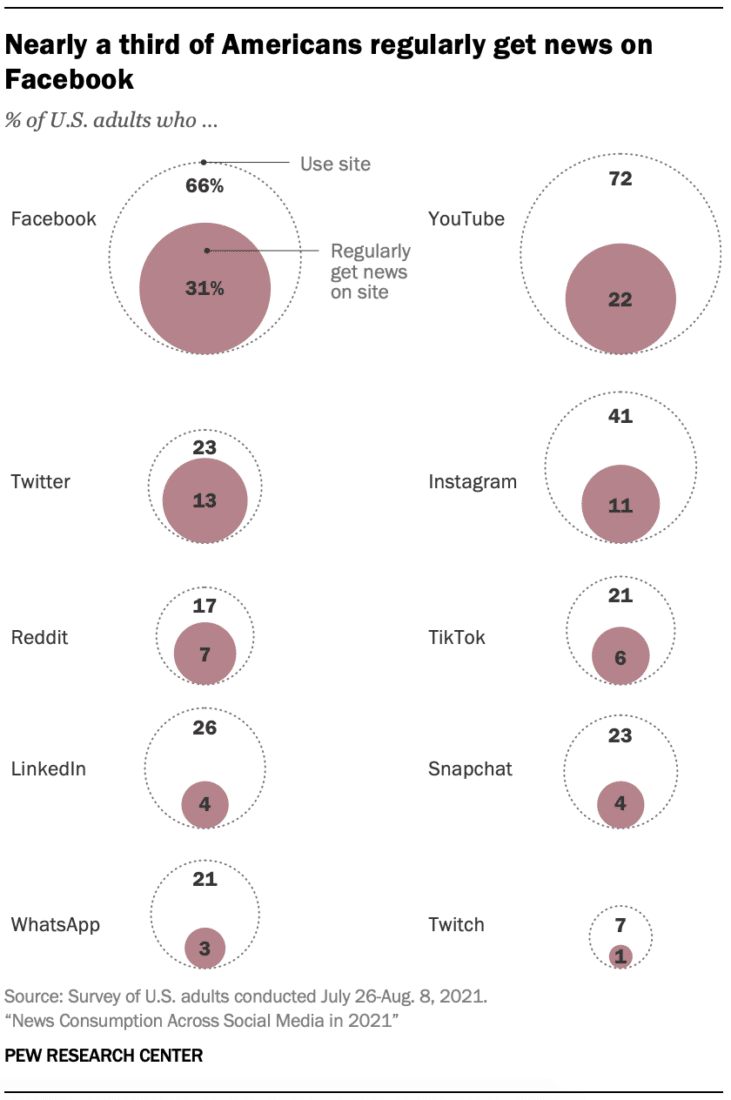

Facebook and YouTube remain the top social media sources of news, although Twitter and Instagram are becoming increasingly popular. How are the social media giants faring in flagging disinformation?

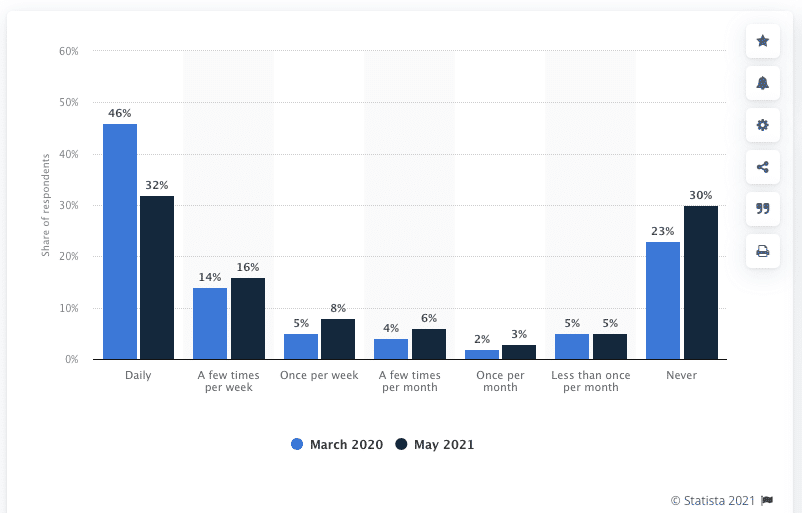

“Consider the source” is advice many a wise person heeds when reading anything on social media. Or at least they should. In 2021, the average time people spent on social media rose to 2 hours and 24 minutes per day. Globally, social media has 4.48 billion active users (240 million in the U.S. alone), and close to 48% of U.S. adults use social media for their news.

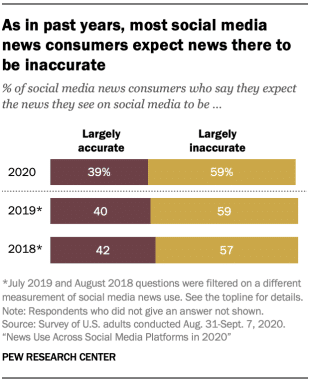

Facebook, YouTube, Instagram and Twitter have become mainstream sources of information, filling the news vacuum left by traditional outlets that have cut back on publishing or shut down entirely. However, 40% of Americans think that what fills this vacuum is rife with disinformation.

Misinformation is the unintentional publication of inaccurate information. Disinformation, on the other hand, is the intentional publication of inaccurate information. The difference between the two lies in the intent of the source. Because intent can be hard to prove, the terms are generally used interchangeably. Another frequently used term is fake news: news that contains misinformation.

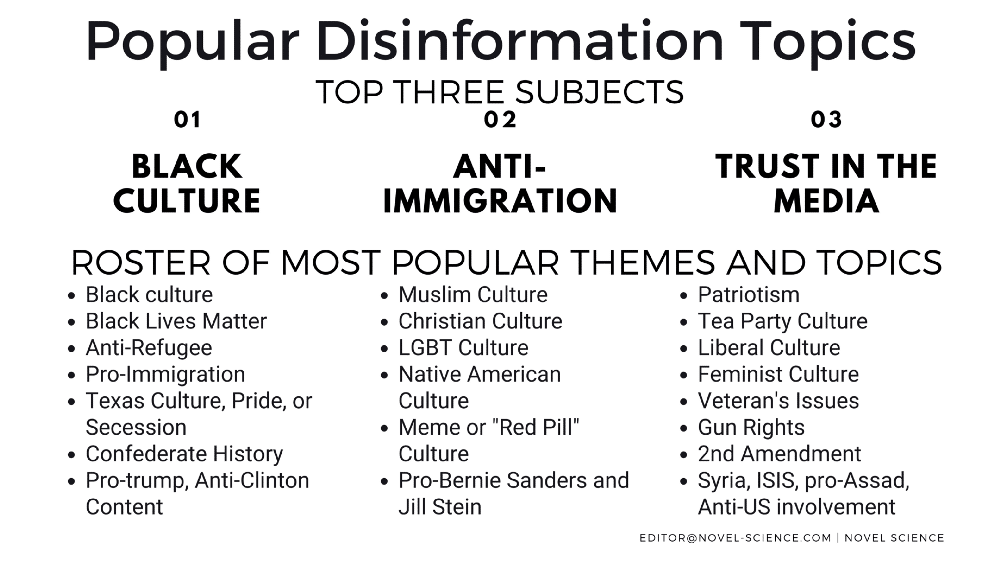

Social media platforms use an attention-driven business model, making money from their users’ scrolling through paid advertising. Their algorithms measure what users engage with and then feed them more content like it. It’s these algorithms, then, that automatically recommend and amplify user preferences, and this is where disinformation has become a problem.

Down the Rabbit Hole

“As humans, we evolved to respond more strongly to negative stimuli than positive ones,” according to Time. “These algorithms detect that and reinforce it, selecting content that sends us down increasingly negative rabbit holes.” More broadly, four features of social media platforms (aggregation, algorithms, anonymity and automation) contribute to the spread of disinformation online.

Social media users engage with news in a variety of ways: consuming it, discovering it, sharing or reposting it, and posting first-person accounts and commentary about it.

According to Statista, this was the media usage in a single online minute in 2021:

- Hours streamed by YouTube users: 694,000

- Views received by Facebook Live: 44M

- Photos shared by Facebook users: 240,000

- Photos shared by Instagram users: 65,000

- Tweets posted by Twitter users: 575,000

- Videos watched by TikTok users: 167M

- Posts shared by media industry on Facebook per week: 53.5

Because information is published and consumed so rapidly on each of these platforms, disinformation flows quickly and frequently into users’ social feeds. Moreover, the very design of social media technologies enhances the speed, scale and spread of disinformation.

Choose Your Own Facts

Americans who mainly get their news on social media tend to be less engaged and less knowledgeable. In an analysis by Pew Research, at least half of social media users reported encountering each of the following:

- One-sided news (83%)

- Inaccurate news (81%)

- Censorship of the news (69%)

- Uncivil discussions about the news (69%)

- Harassment of journalists (57%)

- News organizations or personalities being banned (53%)

- Violent or disturbing news images or videos (51%)

A 2020 nationally representative survey of American teens (ages 13 to 18) by Common Sense Media found that teens most commonly get their news from personalities, influencers and celebrities they follow on social media or YouTube (39%). The sources teens mentioned most often on social media included PewDiePie, Trevor Noah, CNN, Donald Trump and Beyoncé.

The Expanding Spread (and Speed) of Disinformation

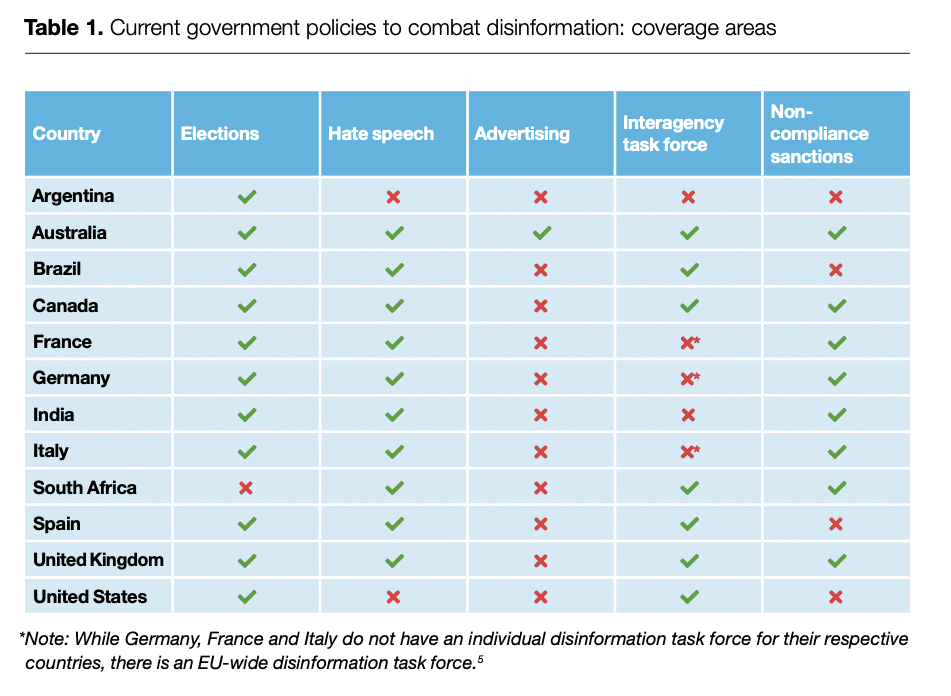

While different countries have taken a variety of approaches to the spread of disinformation, the Global Disinformation Index describes it as a global problem that is not contained by borders. The organization further states that “the online ecosystem that encourages and financially rewards the creation of harmful content is a worldwide phenomenon.”

Organizations that research and report on social media platforms include the Global Disinformation Index, First Draft, Pew Research and Statista. These groups point out that while many platforms have the control and technology to prevent disinformation and hate content from spreading, the responsibility for restricting it falls mostly on users.

Statista has found that sharing fake news is relatively common and that “while savvier and more responsible news audiences refrain from passing on content they know to be false, this is not the case for everyone.” In fact, 10% of users admit to having knowingly shared fake news with others, which, as Statista notes, only exacerbates the problem.

Among U.S. internet users aged 16 to 64, the most popular social media platforms are YouTube (81.9%) and Facebook (73.4%). Both rely on users to flag or report disinformation, and the main educational tactics they promote for combating its spread are media and digital literacy. Media organizations point to critical thinking as one of the key skills users need when fact-checking information.

Some ways users can verify whether information online is true:

- Check whether the headline accurately reflects the rest of the story.

- Search online for keywords to see if the story ranks among top searches.

- Read how other media organizations have reported the story.

- Go back to the original source of the information.

- Use a fact-checking website.

- Talk to friends or family to check whether they have heard of the story.

- Do some research on the media organization that has reported the story.

- Check the publication date.

- Read the comments.

- If on social media, check the profile of the person who shared the story.

- If on social media, check how many people have shared or liked the story.

The Implied Truth Effect

There is no silver bullet for stopping the flow of disinformation. Content moderation and fact-checking (tags, flags, etc.) have limited effect and can have damaging unintended consequences. User education and awareness are a big part of the solution, but not the only part. According to MIT research, “When only some news is labeled as fact-checked and disputed, people believe stories that haven’t been marked as fact-checked more, even when they are completely false.” This is known as the “implied truth effect”: if a story is not overtly labeled as false when so many others are, readers conclude it must be true.

So what are the industry leaders (Facebook, Google’s YouTube and Twitter) doing to stop misinformation?

In 2020, Facebook (which owns Instagram) reported removing “over 14 million pieces of content that constituted misinformation related to Covid-19 that may lead to harm.” In Australia, the Digital Industry Group (DIGI) developed an industry code of practice on disinformation and misinformation at the government’s request. The code subsequently prompted Facebook to provide influencers with training material on false information and on seeking advice from experts.

Facebook’s commitments under the code include:

- Removing disinformation (in line with its Inauthentic Behaviour policy) and misinformation that can cause imminent, real-world harm

- Paying independent, expert third-party fact-checkers to assess content on its services and, if they find it to be false, making significant interventions to limit the spread of that misinformation

- Promoting authoritative information and labeling content to provide greater transparency to users

- Encouraging industry-leading levels of transparency around political advertising

- Building a publicly available live dashboard that allows anybody to track and monitor public Covid-19 content on its services

- Making CrowdTangle, a public insights tool from Facebook, freely available to journalists, third-party fact-checkers, and some academics

Critical Thinking

For its part, YouTube has committed to addressing misinformation on its platform based on the “4 Rs” principle: remove content that violates its policies; reduce recommendations of borderline content; raise up authoritative sources for news and information; and reward trusted creators.

It also asks users to think critically about the content they see on YouTube and elsewhere online so that they can make their own informed decisions. YouTube supports this in three ways: helping users build media literacy skills, enabling the work of organizations that run media literacy initiatives, and investing in thought leadership to understand the broader context of misinformation.

Twitter has also clearly stated its stance on misinformation, which places the onus on journalists, experts and engaged citizens to flag and challenge false information. In practice, this means Twitter does not combat misinformation directly, although it will take action against spammy or manipulative behavior, particularly from bots.

Millions of accounts have been suspended for this reason.

Above all, debunking false news and fake claims needs to be a priority for everyone. Labeling, fact-checking and reducing the sharing of misinformation are all ways users and platforms can work together to combat it.

It is clear that social media has changed the way the world accesses its news. While “consider the source” has long been a mantra for many, it is now a mandatory part of discerning what is real news and what isn’t.

Image by Gerd Altmann from Pixabay
